Ever since I saw the film Iron Man, I have wanted to build a voice-controlled personal assistant similar to Tony Stark's Jarvis. Thanks to the Web Speech API in Chrome, you can now build something similar in your browser.

So far, speech recognition is only available in Chrome, which is why this blog post doesn't cover other browsers.

Making your browser speak – Speech synthesis

    var msg = new SpeechSynthesisUtterance('Hello World');

    // Config (optional)
    // voice has to be a SpeechSynthesisVoice object from getVoices(), not a string.
    // getVoices() can be empty until 'voiceschanged' fires; null means default voice.
    msg.voice = speechSynthesis.getVoices().filter(function (voice) {
      return voice.name === 'Google UK English Male';
    })[0] || null;
    msg.volume = 1; // 0 -> 1
    msg.rate = 1.5; // 0.1 -> 10
    msg.pitch = 1; // 0 -> 2

    speechSynthesis.speak(msg);

The available voices depend on the operating system. On OS X, at the time of writing, there are 74 different voices available. This includes 10 voices from Google in different languages and dialects, as well as the many voices shipped with OS X. You know, the ones we all remember from our first experiments with the say command. The Google voices don't support setting rate or pitch, but the system voices do.
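Since the voice list differs per operating system, it can help to fall back to another voice when the one you asked for is missing. The following is only a minimal sketch: `pickVoice` and `speakWithVoice` are hypothetical helper names, the `en-GB` fallback language is an assumption, and the sketch assumes Chrome fills the voice list asynchronously and fires `voiceschanged` once it is ready.

```javascript
// Pick a voice by exact name, falling back to the first voice for a language.
// `voices` is the array returned by speechSynthesis.getVoices().
function pickVoice(voices, name, fallbackLang) {
  var byName = voices.filter(function (voice) {
    return voice.name === name;
  })[0];
  if (byName) {
    return byName;
  }
  var byLang = voices.filter(function (voice) {
    return voice.lang === fallbackLang;
  })[0];
  return byLang || null; // null lets the browser use its default voice
}

// Hypothetical helper: speak `text` as soon as the voice list is available.
// `synth` is expected to be window.speechSynthesis.
function speakWithVoice(synth, text, name, fallbackLang) {
  function speakNow() {
    var msg = new SpeechSynthesisUtterance(text);
    msg.voice = pickVoice(synth.getVoices(), name, fallbackLang);
    synth.speak(msg);
  }
  if (synth.getVoices().length > 0) {
    speakNow();
  } else {
    // Assumption: the list is still loading, so wait for 'voiceschanged'.
    synth.addEventListener('voiceschanged', speakNow, { once: true });
  }
}

// Browser usage (not executed here):
// speakWithVoice(window.speechSynthesis, 'Hello World',
//                'Google UK English Male', 'en-GB');
```

Keeping `pickVoice` free of browser globals means the selection logic can be tried out with plain objects before wiring it up to the real voice list.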

Making your browser listen – Speech recognition

    var recognition = new webkitSpeechRecognition();
    recognition.lang = "en-US"; // "de-DE", "en-IN"

    // Config (optional)
    recognition.continuous = true;
    recognition.interimResults = true;

    recognition.addEventListener('result', function (event) {
      // event.resultIndex is the first entry in event.results that changed
      var result = event.results[event.resultIndex];
      console.log(result[0].transcript); // "whats the time"
      console.log(result[0].confidence); // 0.93414807319641
    });

    recognition.addEventListener('end', function (event) {
      // Start your action if not continuous
    });

    recognition.start();

The block above starts the recognition. Set lang to the language Chrome should listen for. The continuous flag defines whether recognition stops once the end of voice input is detected or keeps listening. The interimResults flag specifies whether result events fire multiple times while the user is speaking or just once, when recognition of the current audio stream has finished. Interim results can be used to provide feedback in the UI while the user is still speaking.

Each entry in event.results carries an isFinal flag, which tells you when recognition of that phrase is complete. event.resultIndex is the index of the first entry that changed with the current event; the entries before it are unchanged from the previous event.
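To show interim feedback in the UI while only acting on finished phrases, the final and interim parts of a result event have to be separated. Here is one way this could look; `collectTranscripts` is a hypothetical helper name, and the sketch only ever reads the first alternative of each result.

```javascript
// Split the results of one 'result' event into final and interim text.
// `results` is event.results, `resultIndex` is event.resultIndex.
function collectTranscripts(results, resultIndex) {
  var finalText = '';
  var interimText = '';
  for (var i = resultIndex; i < results.length; i++) {
    var best = results[i][0]; // first alternative of this result
    if (results[i].isFinal) {
      finalText += best.transcript;
    } else {
      interimText += best.transcript;
    }
  }
  return { finalText: finalText, interimText: interimText };
}

// Browser usage (not executed here):
// recognition.addEventListener('result', function (event) {
//   var text = collectTranscripts(event.results, event.resultIndex);
//   // show text.interimText in the UI, act on text.finalText
// });
```

Starting the loop at `resultIndex` avoids handling phrases again that an earlier event already delivered.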

Demo

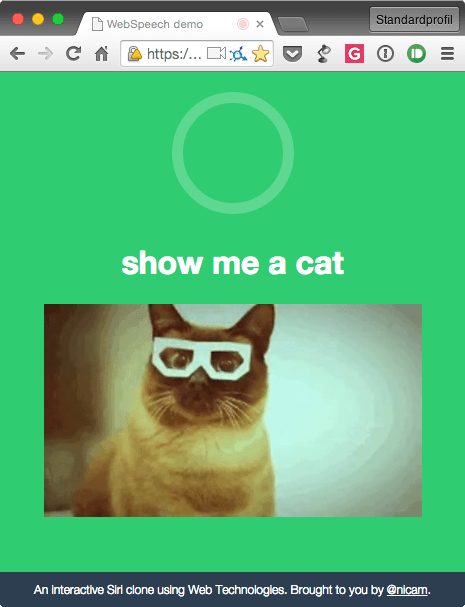

Using these APIs, a Node.js backend and some help from WolframAlpha, I built a first prototype of my personal assistant. You can check it out on Heroku (give it some time to wake up the dyno).

Some example questions are:

- What time is it?

- What's 12 + 1?

- How big is Earth?

- What's 20€ in CHF?

- Show me a [cat, dog, anything that has a gif]

The demo code is available in my GitHub repository.

Use it with caution

Even though these APIs are exhilarating to use, they are not perfect. The recognition will ask for permission to use the microphone. If you request the microphone over HTTPS, Chrome remembers the decision permanently. If you request it over a regular HTTP connection, it asks every time you start a recognition. This is very counterintuitive if you want to build an interactive assistant that starts and stops the recognition on its own.
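An assistant that keeps listening on its own could restart the recognition whenever it ends. This is only a rough sketch with a hypothetical `keepListening` helper. It assumes the page is served over HTTPS, so that the restart doesn't trigger a new permission prompt each time.

```javascript
// Restart recognition whenever it ends, until stop() is called.
// `recognition` is any object with start(), stop() and addEventListener(),
// for example a webkitSpeechRecognition instance.
function keepListening(recognition) {
  var active = true;
  recognition.addEventListener('end', function () {
    if (active) {
      recognition.start(); // listen again after the recognition ended
    }
  });
  recognition.start();
  return {
    stop: function () {
      active = false; // prevents the 'end' handler from restarting
      recognition.stop();
    }
  };
}

// Browser usage (not executed here):
// var session = keepListening(new webkitSpeechRecognition());
// ...later: session.stop();
```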

Another issue is the synthesis of longer texts. If your sentence is longer than 120 characters, it will just stop speaking at some point and won't fire the end event either [1]. You'll need to restart your browser to restore the speech synthesiser. The workaround I used in the demo is to split longer texts into multiple utterances. This isn't perfect, since there are small gaps between the playback of different utterances and the splitter has to take periods into account.
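The splitting workaround could be sketched roughly like this. It is not the code from the demo: `splitIntoChunks` and `speakLongText` are hypothetical names, sentences are assumed to end with ".", "!" or "?" followed by whitespace, and a single word longer than the limit is left in one piece.

```javascript
// Split text into chunks of at most maxLength characters, breaking at
// sentence ends first and between words second.
function splitIntoChunks(text, maxLength) {
  // Mark sentence ends (., ! or ? followed by whitespace) with a newline,
  // so a period inside "3.14" does not count as a sentence end.
  var sentences = text.replace(/([.!?])\s+/g, '$1\n').split('\n');
  var chunks = [];
  sentences.forEach(function (sentence) {
    var current = '';
    sentence.split(/\s+/).forEach(function (word) {
      if (word === '') {
        return;
      }
      var candidate = current === '' ? word : current + ' ' + word;
      if (candidate.length > maxLength && current !== '') {
        chunks.push(current); // chunk is full, start a new one
        current = word;
      } else {
        current = candidate;
      }
    });
    if (current !== '') {
      chunks.push(current);
    }
  });
  return chunks;
}

// Hypothetical helper: queue one utterance per chunk.
// `synth` is expected to be window.speechSynthesis.
function speakLongText(synth, text) {
  splitIntoChunks(text, 120).forEach(function (chunk) {
    synth.speak(new SpeechSynthesisUtterance(chunk));
  });
}
```

Every sentence becomes at least one utterance here, which keeps the splitter simple but also means more of the small gaps mentioned above.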

[1] Speech Synthesiser Bug: code.google.com/p/chromium/issues/detail