One of the more daunting tasks with a chatbot is analysing questions and answers that were not satisfying. What went wrong? What could have been done better? Is it retrieval? The data? The LLM? What could be improved? Ranking? Prompt? Sources? And so on. When you're running a RAG chatbot in production, these questions come up regularly, and going through them manually is tedious work that doesn't scale. Especially during the internal testing phase with lots of feedback, often (fortunately) at least with comments, what was wrong and should be better. A phase we always do with our clients and a very important one to get to the quality needed.

And I'm a big fan of Claude Code. It's currently my daily driver when it comes to AI-assisted coding.

So I thought, why not use an AI agent to help with this? Anthropic released the Claude Agent SDK, which gives you programmatic access to the same agent loop and tools that power Claude Code. You can build autonomous agents that can interact with your data and tools, do Web Search and Web Fetch, without having to implement the agent loop yourself. Perfect for this kind of task.

We then built some tools and skills around it to access our chatbot's data: conversation logs, retrieved chunks, source documents, user feedback, configured prompts, etc. Now the agent can analyse a single question, or all questions with bad feedback, and make suggestions on what went well, what went wrong and what could be improved. Directly in the LiipGPT Admin UI interface with a pretty standard Chatbot interface for every admin user to use without additional installation. You don't need to be a techie to be able to use it.

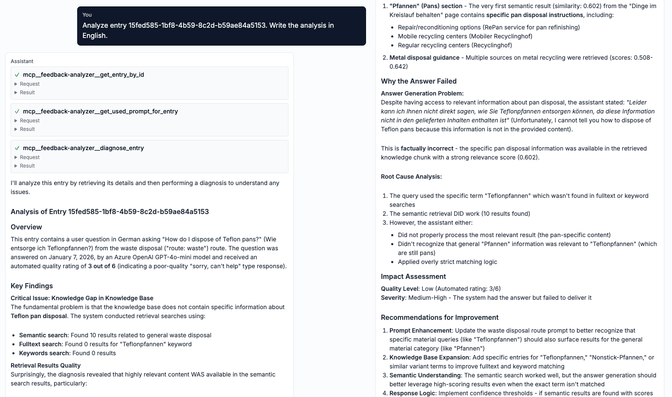

Here's an example of such an output (Full size image):

We could also have built something like this with for example LangGraph, which we use extensively in our chatbot stack. But the Claude Agent SDK has all the features built in already. Maybe too many actually, so take care of the permissions. Since this is not for a public chatbot but an internal analysis tool, we also don't need fine-grained control over everything. Basically more than good enough for the effort it needed. With great outcome to the problem at hand.

Now we can have an extended analysis of user questions in seconds, ask follow-up questions, do deeper analysis and improve the whole system with way less time and fewer repetitive tasks than before. The feedback is not always perfect, but sometimes also surprising and very insightful.

Costs can accumulate with such AI agents, especially when they're doing complex analysis with multiple tool calls. So we use the cheapest Anthropic model, Haiku, by default. For most analysis tasks, it's more than capable. We also display the weekly accumulated costs to keep it somehow under control. But the time saved is worth it anyway.

As far as I know, you could also run this with an open source LLM by changing the base URL in the SDK. Given our previous work on running ZüriCityGPT entirely on open source models, this is something we'd like to explore. Haven't tried it yet, though.

AI agents are a great fit for tasks like this: repetitive analysis work that requires context from multiple sources and some reasoning. The Claude Agent SDK made it easy to get started without building everything from scratch. If you have data that needs regular analysis and you're tired of doing it manually, this approach might be worth a try.