Discover what our agency can also do for you in regard to Technical SEO

You can have the world’s fanciest website, but if Google can’t crawl and index it, nobody will find you. The Liip SEO team often carries out technical SEO audits. Among the first things to check (and fix) on a website are crawling and indexing problems.

Difference between SEO Crawling and Indexing

Crawling is the process of discovering new or updated website pages. After crawling comes indexing. In this phase, Google tries to understand what the page is about (content, images, etc.). All the information is stored in the Google index.

Why isn't my website indexed?

If your pages are not indexed, it’s possible that Googlebot encountered a crawling or indexing problem.

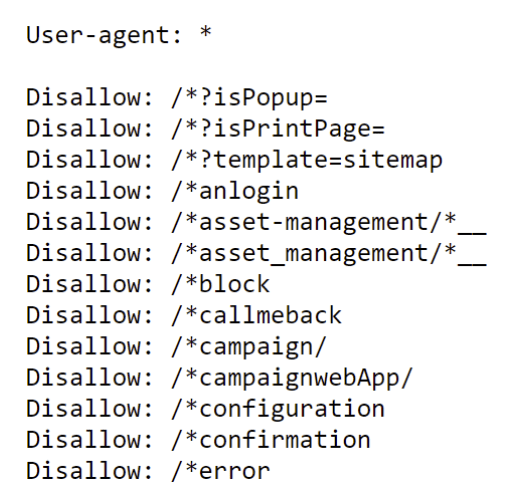

- Make sure that the robots.txt file is not blocking the resources. Robots.txt instructs crawlers on how to crawl the website. As you can see from the image below, all web crawlers are told not to crawl specific resources.

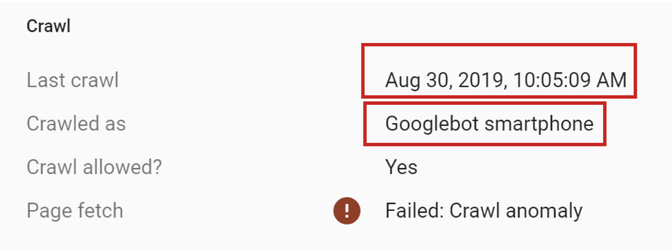

- Make sure that the WAF (web application firewall) is not blocking Googlebot. The goal of a WAF is to prevent web application attacks, and the WAF can sometimes mistake Googlebot for an “enemy”. As a result, Googlebot is blocked before it can crawl the website. To check whether the WAF is causing issues, open the Coverage report in the Google Search Console. Check the last time that Googlebot crawled the website and compare it with the WAF logs.

If the requests blocked by the WAF line up with Googlebot’s crawl attempts, the WAF is the likely cause. It is possible that the problem lies in a header sent by Googlebot and that the WAF reads the header value as an SQL injection attack. Once you have understood the problem and corrected it, it’s time to test that everything runs smoothly. You can do so with the free version of Postman, for example by replaying a request with the same headers that Googlebot sends.

- Make sure that important pages do not have a noindex meta tag. A noindex meta tag still allows Googlebot to crawl the page but instructs it not to index the page.

- Make sure that you have no orphan pages. Orphan pages are pages that no other page on the website links to. Screaming Frog helps you find orphan pages and displays the status code of each URL. A 200 status code means that the request was successful. Screaming Frog has a free version that crawls up to 500 URLs.

-

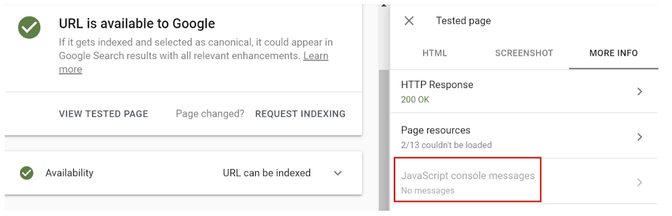

Make sure that Javascript is not a problem. In most cases, Google is able to index Javascript. It simply requires an extra step. Crawling-->indexing--->rendering. To make sure that Javascript is not a problem, you can view the URL inspect report in the search console. See image below:
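The robots.txt and noindex checks from the list above can also be scripted. The following is a minimal sketch using only the Python standard library, not a full audit tool. The function names `is_crawl_allowed` and `has_noindex` and the sample inputs are illustrative assumptions. Note that Python's `urllib.robotparser` follows the basic robots.txt rules and may not match Google's own parser in every detail (for example, wildcard patterns), so treat its result as a first indication only.

```python
from html.parser import HTMLParser
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the given robots.txt text lets user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        if attr.get("name", "").lower() in ("robots", "googlebot"):
            content = attr.get("content", "")
            self.directives += [d.strip().lower() for d in content.split(",")]


def has_noindex(html: str) -> bool:
    """Return True if the HTML contains a robots meta tag with a noindex directive."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    # Hypothetical sample inputs, for illustration only.
    sample_robots = "User-agent: *\nDisallow: /private/\n"
    sample_html = '<html><head><meta name="robots" content="noindex, follow"></head></html>'

    print(is_crawl_allowed(sample_robots, "https://example.com/private/page.html"))
    print(is_crawl_allowed(sample_robots, "https://example.com/blog/"))
    print(has_noindex(sample_html))
```

In practice you would pass in the real robots.txt and page source that you downloaded from your own website.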

How can I find out if my website has an indexing problem?

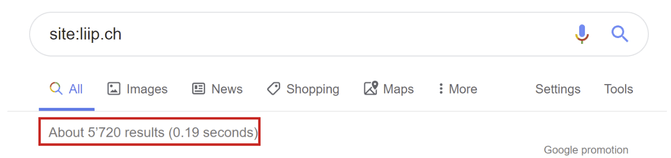

- The first thing to do is to search Google with the operator site:domain.com and see if there is a discrepancy between the number of URLs that you expect to be indexed and the number of URLs that are actually indexed. The accompanying image shows an example of the site: operator in use.

- Use the Google Search Console to discover crawling problems. For instance, if you submit a sitemap and there are crawling problems, Google will indicate them in the Coverage report.

- Select important pages and test their URLs in the URL Inspection report in the Google Search Console. It provides relevant information, such as whether the crawler encountered a crawl anomaly.

![URL inspection report in the search console that provides information about crawl issues](where-to-find-in-the-search-console-if-there-is-an-indexing-error.png)

- To make sure that the problem is real, it is also important to check the tested URLs on Google by searching for site: followed by the specific URL.
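The comparison between expected and indexed URLs described above can be sketched in a few lines of Python. This is only an illustration under two assumptions: you already have a list of the URLs you expect to be indexed (for example, from your sitemap) and a list of URLs you found to be indexed (collected by hand from the site: results or from the Search Console). The function names and the very simple normalisation, which only trims whitespace and a trailing slash, are hypothetical choices.

```python
def normalize(url: str) -> str:
    """Trim whitespace and a trailing slash so equivalent URLs compare equal."""
    return url.strip().rstrip("/")


def index_discrepancy(expected_urls: list[str], indexed_urls: list[str]) -> dict[str, list[str]]:
    """Compare the URLs you expect to be indexed with the URLs that are indexed."""
    expected = {normalize(u) for u in expected_urls}
    indexed = {normalize(u) for u in indexed_urls}
    return {
        # Expected pages that were not found in the index.
        "missing": sorted(expected - indexed),
        # Indexed pages that you did not expect (old or duplicate URLs, for example).
        "unexpected": sorted(indexed - expected),
    }


if __name__ == "__main__":
    # Hypothetical sample data, for illustration only.
    expected = ["https://example.com/", "https://example.com/blog/", "https://example.com/contact"]
    indexed = ["https://example.com", "https://example.com/contact/", "https://example.com/old-page"]
    print(index_discrepancy(expected, indexed))
```

The pages listed under "missing" are the ones worth testing first in the URL Inspection report.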

How can I help Google index my website?

To help Google index your website, it’s important that all the above elements are fixed. Google must be able to understand what your page is about. For this reason, on-page SEO is extremely important in facilitating indexing. To improve crawling, you can create a sitemap and submit it in the Google Search Console.
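If your CMS does not generate a sitemap for you, a basic one is easy to build. The sketch below writes a minimal XML sitemap in the sitemaps.org format using Python's standard library. The function name `build_sitemap` and the example URLs are assumptions for illustration; a real sitemap may also carry optional fields such as `lastmod`, which are left out here.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal XML sitemap listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_element = ET.SubElement(urlset, "url")
        # ElementTree escapes special characters such as "&" in the URL.
        ET.SubElement(url_element, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    # Hypothetical URLs, for illustration only.
    sitemap = build_sitemap(["https://example.com/", "https://example.com/blog/"])
    with open("sitemap.xml", "w", encoding="utf-8") as handle:
        handle.write(sitemap)
```

Once the file is published on your website, you can submit its URL in the Google Search Console.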

What’s next?

Technical SEO is much more than just crawling and indexing. In the next SEO blog post, we will explain what a technical audit is and which elements are important. Reach out to us if you want to be notified when our guide is ready!

If your website is not ranking as you wish, contact our SEO experts for an audit:

In English, German and French.